Introduction

Apache Spark is an open-source distributed cluster-computing framework implemented in Scala. Its 1.0 release came out in 2014, and it has since become popular for many computing applications, including machine learning, thanks in part to its user-friendly APIs. That popularity also gave rise to many online courses of varied quality.

In this post, I share my personal experience with completing the Big Data Analysis with Scala and Spark course on Coursera in May 2020, briefly walk through the content and write about the course assignments. I wrote down each of the paragraphs as I went through the course, so it is not a retrospective evaluation but more of a “review-style diary” of the process of completing the course.

Disclaimer, what to expect

First off, this post is not meant to be an objective review, as your experience will most likely be very different from mine. Before this course, I also completed one of its prerequisites, the Functional Programming Principles in Scala course, which I reviewed here.

This is not a paid review. I have no affiliation with Coursera or any other party and receive no benefit from writing it.

Course organization, pre-course preparatory work

Organization

The course is organized into video sessions split into 4 weeks, but since it is fully online you can choose your own pace. I completed the course in one week while being on a standard working schedule. Each week apart from week 3 has a programming assignment that is submitted to Coursera and automatically graded.

I found the assignments executed very well from a technical perspective and had no issues at all with downloading, compiling, running, and submitting them.

As with the other courses in the specialization, you can submit each assignment as many times as you want, so there is no pressure to get the submission right on the first try. Once the course is completed, you get a certificate.

Pre-course setup

Since I have prior experience with Scala and sbt and I already completed a previous course in the specialization, there was no extra setup overhead.

If you are an R user used to conveniently opening RStudio and easily installing packages, you may be surprised by the difficulty of the whole setup. The course does provide setup videos for major platforms, so with a bit of patience, you should be good to go.

Week 1

Content

The week introduces Spark in a very practical way: the motivation behind it and how it compares to Hadoop, especially for data science applications and workflows. It presents the main collection class that Spark works with, the RDD, and provides a very useful comparison between the RDD API and the Scala collections API. This builds upon the topics covered in the previous courses, mainly the Functional Programming Principles in Scala course. It also very nicely covers the difference between transformations and actions on RDDs, and how that relates to the difference between the eager evaluation of sequential collections and the lazy evaluation of transformations on RDDs.
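To make the transformation-versus-action point concrete, here is a minimal sketch, assuming Spark is on the classpath and runs in local mode. The object name `LazyDemo`, the sample words, and the accumulator name are made up for illustration; the accumulator is only there to count how often the mapping function has actually run.

```scala
import org.apache.spark.SparkContext
import org.apache.spark.sql.SparkSession

object LazyDemo {
  /** Returns how often the map function had run before and after the action. */
  def run(sc: SparkContext): (Long, Long) = {
    val calls = sc.longAccumulator("mapCalls") // hypothetical name
    val words = sc.parallelize(Seq("spark", "scala", "rdd", "action"))

    // Transformation: only describes the computation, nothing is executed yet.
    val lengths = words.map { w => calls.add(1L); w.length }
    val before = calls.sum

    // Action: triggers the job and returns a result to the driver.
    lengths.reduce(_ + _)
    val after = calls.sum

    (before, after)
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[*]").appName("lazy-demo").getOrCreate()
    try println(run(spark.sparkContext))
    finally spark.stop()
  }
}
```

In a simple local run without task retries, the counter should still be zero after `map` and only reach the number of elements once `reduce` is called. A strict Scala `List` would have applied the function as soon as `map` was called.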

The content also covers cluster topology, how the driver and worker nodes are related, and what gets executed where. The importance of having data parallelized in such a way that there is little shuffling between the nodes is also highlighted.

I found the video session on latency especially useful. It compares the speed of different operations, such as referencing memory, reading from disk, and sending packets over a network, in very understandable terms. This motivates good practices in partitioning data and in designing processes that minimize the expensive operations.

This was fantastic content and I binged it in one evening.

Assignment

The assignment is quite fun and practical: the goal is to use full-text data from Wikipedia to produce a very simple metric of how popular a programming language is.

The only issue I had was that a lot of methods that should have been used were only introduced in the content of Week 2, so I had to study their documentation myself to implement the assignment. Had I known that they are introduced in detail in Week 2, I would have watched those sessions first before working on this assignment.

Week 2

Content

The week starts by explaining foldLeft, fold, and aggregate. The explanations are very good, and it would have been great to have them before the first assignment. The lectures even mention a structure similar to the first assignment, along with distributed key-value pairs (pair RDDs), which support reduceByKey.
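As a rough illustration of how these operations differ, here is a small sketch, assuming Spark in local mode. The object name `FoldDemo` and the sample words are hypothetical. Note that `foldLeft` is called on a plain Scala collection here, since RDDs offer `fold` and `aggregate` but no `foldLeft`.

```scala
import org.apache.spark.SparkContext
import org.apache.spark.sql.SparkSession

object FoldDemo {
  def run(sc: SparkContext): (Int, Int, Int, Map[String, Int]) = {
    val words = Seq("spark", "scala", "rdd")

    // foldLeft (Scala collections): the result type (Int) may differ from the
    // element type (String), but the traversal is strictly sequential.
    val viaFoldLeft = words.foldLeft(0)((acc, w) => acc + w.length)

    val rdd = sc.parallelize(words, numSlices = 2)

    // fold (RDD): parallelizable, but zero value and result share the element type,
    // so the strings are mapped to their lengths first.
    val viaFold = rdd.map(_.length).fold(0)(_ + _)

    // aggregate (RDD): a per-partition function that may change the type,
    // plus a function that combines the partial results.
    val viaAggregate = rdd.aggregate(0)((acc, w) => acc + w.length, _ + _)

    // reduceByKey (pair RDD): total length per first letter.
    val byInitial = rdd.map(w => (w.take(1), w.length)).reduceByKey(_ + _).collect().toMap

    (viaFoldLeft, viaFold, viaAggregate, byInitial)
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[*]").appName("fold-demo").getOrCreate()
    try println(run(spark.sparkContext))
    finally spark.stop()
  }
}
```

All three totals should agree; the difference lies in which of them can be split across partitions and whether the result type may differ from the element type.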

The later sessions introduce different available joins on pair RDDs, again showing examples, so the concepts are easy to understand. The explanations are very clear and detailed.
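The join variants on pair RDDs can be sketched as follows, assuming Spark in local mode. The object name `JoinDemo` and the customer data are invented for illustration and are not taken from the course material.

```scala
import org.apache.spark.SparkContext
import org.apache.spark.sql.SparkSession

object JoinDemo {
  /** Returns the sorted keys that survive an inner, left outer, and right outer join. */
  def run(sc: SparkContext): (Seq[Int], Seq[Int], Seq[Int]) = {
    // Hypothetical data: customer id -> subscription, customer id -> visited city.
    val subscriptions = sc.parallelize(Seq((101, "monthly"), (102, "yearly"), (103, "monthly")))
    val visits = sc.parallelize(Seq((101, "Bern"), (101, "Thun"), (102, "Basel"), (104, "Chur")))

    // Inner join: only keys present on both sides, RDD[(Int, (String, String))].
    val inner = subscriptions.join(visits)

    // Left outer join: every key of the left side, RDD[(Int, (String, Option[String]))].
    val left = subscriptions.leftOuterJoin(visits)

    // Right outer join: every key of the right side, RDD[(Int, (Option[String], String))].
    val right = subscriptions.rightOuterJoin(visits)

    def keys[V](pairs: Array[(Int, V)]): Seq[Int] = pairs.map(_._1).sorted.toSeq
    (keys(inner.collect()), keys(left.collect()), keys(right.collect()))
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[*]").appName("join-demo").getOrCreate()
    try println(run(spark.sparkContext))
    finally spark.stop()
  }
}
```

Customer 101 appears twice in each result because it has two visits, customer 103 only survives the left outer join, and customer 104 only survives the right outer join.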

Assignment

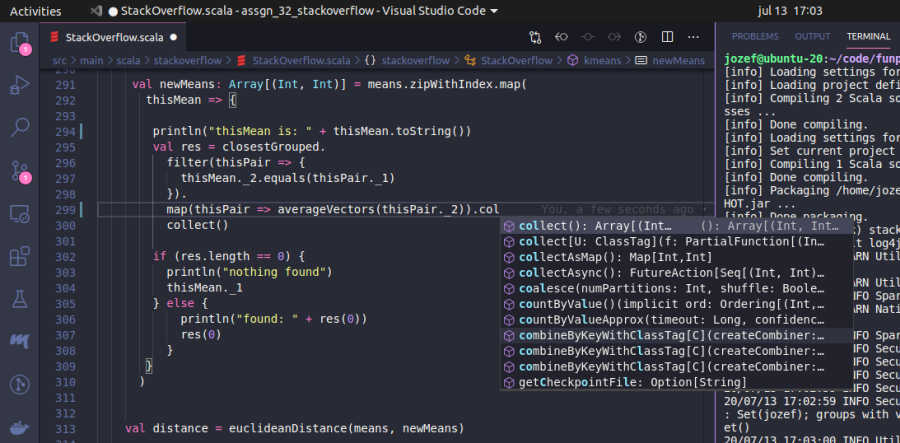

This time the goal is to look at StackOverflow questions and answers data and apply k-means to cluster the content by languages. This was a very interesting and fun assignment.
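For readers unfamiliar with k-means, a single iteration on an RDD of two-dimensional points might look roughly like the sketch below. This is a generic version that recomputes each mean as the average of its assigned points, not the assignment's reference solution; `KMeansSketch`, `Point`, and the helper names are hypothetical.

```scala
import org.apache.spark.rdd.RDD

object KMeansSketch {
  type Point = (Int, Int)

  def squaredDistance(a: Point, b: Point): Double = {
    val dx = (a._1 - b._1).toDouble
    val dy = (a._2 - b._2).toDouble
    dx * dx + dy * dy
  }

  /** Index of the mean closest to the given point. */
  def closest(p: Point, means: Array[Point]): Int =
    means.indices.minBy(i => squaredDistance(p, means(i)))

  /** One k-means iteration: assign every point to its closest mean, then re-average. */
  def step(points: RDD[Point], means: Array[Point]): Array[Point] = {
    val updated = points
      .map(p => (closest(p, means), (p._1.toLong, p._2.toLong, 1L)))
      // Sum coordinates and counts per cluster, combining within partitions first.
      .reduceByKey { case ((x1, y1, n1), (x2, y2, n2)) => (x1 + x2, y1 + y2, n1 + n2) }
      .mapValues { case (x, y, n) => ((x / n).toInt, (y / n).toInt) }
      .collectAsMap()

    // A mean that attracted no points keeps its previous position.
    means.indices.map(i => updated.getOrElse(i, means(i))).toArray
  }
}
```

Calling `step` repeatedly until the means stop moving (or an iteration limit is reached) gives the usual algorithm.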

Implementing it let me appreciate how R is amazing for exploratory and interactive data science work. Compared to R, debugging the Scala code was challenging, and writing data wrangling code to get the data into proper format took me hours.

For comparison, here is the Scala code I wrote to get the data into the requested format:

    val langs = List(
      "JavaScript", "Java", "PHP", "Python", "C#", "C++", "Ruby", "CSS",
      "Objective-C", "Perl", "Scala", "Haskell", "MATLAB", "Clojure", "Groovy"
    )

    def langSpread = 50000

    val lines = sc.textFile("src/main/resources/stackoverflow/stackoverflow.csv")
    val raw = rawPostings(lines)

    /** Parse lines into proper structure */
    def rawPostings(lines: RDD[String]): RDD[Posting] =
      lines.map(line => {
        val arr = line.split(",")
        Posting(
          postingType = arr(0).toInt,
          id = arr(1).toInt,
          acceptedAnswer = if (arr(2) == "") None else Some(arr(2).toInt),
          parentId = if (arr(3) == "") None else Some(arr(3).toInt),
          score = arr(4).toInt,
          tags = if (arr.length >= 6) Some(arr(5).intern()) else None
        )
      })

    /** Group the questions and answers together */
    def groupedPostings(
      postings: RDD[Posting]
    ): RDD[(QID, Iterable[(Question, Answer)])] = {
      val questions = postings.
        filter(thisPosting => thisPosting.postingType == 1).
        map(thisQuestion => (thisQuestion.id, thisQuestion))
      val answers = postings.
        filter(thisPosting => thisPosting.postingType == 2).
        map(thisAnswer => (thisAnswer.parentId.get, thisAnswer))
      questions.join(answers).groupByKey()
    }

    /** Compute the maximum score for each posting */
    def scoredPostings(
      grouped: RDD[(QID, Iterable[(Question, Answer)])]
    ): RDD[(Question, HighScore)] = {
      def answerHighScore(as: Array[Answer]): HighScore = {
        var highScore = 0
        var i = 0
        while (i < as.length) {
          val score = as(i).score
          if (score > highScore) highScore = score
          i += 1
        }
        highScore
      }
      grouped.map{
        case (_, qaList) => (
          qaList.head._1,
          answerHighScore(qaList.map(x => x._2).toArray)
        )
      }
    }

Editing Scala in VS Code

And here is data.table code that can reach very similar results:

    library(data.table)

    # Read Data -----
    so <- fread("http://alaska.epfl.ch/~dockermoocs/bigdata/stackoverflow.csv")
    colNames <- c("postTypeId", "id", "acceptedAnswer", "parentId", "score", "tag")
    setnames(so, colNames)

    # Select questions and answers -----
    que <- so[postTypeId == 1, .(queId = id, queTag = tag)]
    ans <- so[postTypeId == 2, .(ansId = id, queId = parentId, ansScore = score)]

    langSpread <- 50000L
    langs <- data.frame(
      index = (0:14) * langSpread,
      queTag = c(
        "JavaScript", "Java", "PHP", "Python", "C#", "C++", "Ruby", "CSS",
        "Objective-C", "Perl", "Scala", "Haskell", "MATLAB", "Clojure", "Groovy"
      )
    )

    # Merge into final object -----
    mg <- merge(que, ans, by = "queId")
    mg <- mg[, .(maxAnsScore = max(ansScore)), by = .(queId, queTag)]
    mg <- merge(mg, langs)

Some tweaks were also needed to make the grader happy. Since the grader output is not very detailed and no local unit tests were provided, it took me quite a few submissions to get this right. All in all, it was a fun assignment, and it highlighted how much simpler R is for this type of usage.

Week 3

Content

This week focuses on partitioning and shuffling. The video lectures explain the concepts very well and even provide a practical hands-on example of how preventing shuffles can significantly improve the performance of operations on RDDs.
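One common way to reduce shuffling is to prefer `reduceByKey` over `groupByKey` followed by a reduction. The sketch below, assuming Spark in local mode, computes the same per-key totals both ways; the object name `ShuffleDemo` and the purchase data are made up, and this is not the example used in the lectures.

```scala
import org.apache.spark.SparkContext
import org.apache.spark.sql.SparkSession

object ShuffleDemo {
  def run(sc: SparkContext): (Map[Int, Double], Map[Int, Double]) = {
    // Hypothetical data: customer id -> purchase amount, spread over 3 partitions.
    val purchases = sc.parallelize(
      Seq((1, 10.0), (2, 5.0), (1, 2.5), (3, 7.0), (2, 1.0)),
      numSlices = 3
    )

    // groupByKey moves every (key, value) pair across the cluster before summing.
    val viaGroup = purchases.groupByKey().mapValues(_.sum).collect().toMap

    // reduceByKey sums within each partition first, so fewer records are shuffled.
    val viaReduce = purchases.reduceByKey(_ + _).collect().toMap

    (viaGroup, viaReduce)
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[*]").appName("shuffle-demo").getOrCreate()
    try println(run(spark.sparkContext))
    finally spark.stop()
  }
}
```

Both variants return the same totals; on a tiny local data set the performance difference is not measurable, so the benefit only shows on data that is actually distributed.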

The lectures also cover optimizing Spark operations with partitioners and the key differences between wide and narrow dependencies in the context of fault tolerance. Again, a concrete example is provided along with the explanations, which I find very helpful.
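A small sketch of working with partitioners, assuming Spark in local mode; `PartitionerDemo` and the sample pairs are hypothetical. It shows that `mapValues` keeps the partitioner of a pre-partitioned pair RDD, while `map` discards it because it could have changed the keys.

```scala
import org.apache.spark.{HashPartitioner, SparkContext}
import org.apache.spark.sql.SparkSession

object PartitionerDemo {
  /** Returns (is partitioned, mapValues keeps partitioner, map drops partitioner). */
  def run(sc: SparkContext): (Boolean, Boolean, Boolean) = {
    val pairs = sc.parallelize(Seq((1, "a"), (2, "b"), (3, "c"), (4, "d")))

    // Partition once by key and persist, so later key-based operations can reuse the layout.
    val partitioned = pairs.partitionBy(new HashPartitioner(2)).persist()

    // mapValues cannot touch the keys, so the partitioner is preserved.
    val keptByMapValues =
      partitioned.mapValues(_.toUpperCase).partitioner == partitioned.partitioner

    // map may change the keys, so Spark forgets the partitioner.
    val droppedByMap =
      partitioned.map { case (k, v) => (k, v.toUpperCase) }.partitioner.isEmpty

    (partitioned.partitioner.isDefined, keptByMapValues, droppedByMap)
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[*]").appName("partitioner-demo").getOrCreate()
    try println(run(spark.sparkContext))
    finally spark.stop()
  }
}
```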

Assignment

There is no assignment in Week 3.

Week 4

Content

Once again, this is an extremely useful set of sessions, introducing the DataFrame, Dataset, and Spark SQL APIs. This week’s content is especially great for R and Python users, as the untyped APIs are the ones that PySpark and SparkR (and sparklyr) users will interact with the vast majority of the time. The sessions explain how these higher-level APIs relate to the typed RDD API and how the two main optimization components, Catalyst and Tungsten, optimize the code that users send via the high-level APIs.

There is also a benchmark comparing different RDD-based approaches, which Spark does not optimize for you and which therefore show performance drops, against the Spark SQL API, which optimizes the query so that even one written inefficiently by the user executes very fast.
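One practical consequence of typed versus untyped APIs can be sketched as follows, assuming Spark in local mode. The case class `Listing`, the object name, and the deliberately misspelled column `prize` are all hypothetical.

```scala
import scala.util.Try
import org.apache.spark.sql.{DataFrame, Dataset, SparkSession}

final case class Listing(city: String, price: Double) // hypothetical record type

object TypedVsUntyped {
  def run(spark: SparkSession): (Boolean, Seq[Double]) = {
    import spark.implicits._

    val ds: Dataset[Listing] = Seq(Listing("Zurich", 900.0), Listing("Bern", 700.0)).toDS()
    val df: DataFrame = ds.toDF()

    // Untyped: columns are looked up by name, so a misspelled column compiles
    // and only fails once Spark analyzes the query.
    val typoFailsAtRuntime = Try(df.select("prize")).isFailure

    // Typed: fields are checked by the Scala compiler; `_.prize` would not compile.
    val prices = ds.map(_.price).collect().toSeq.sorted

    (typoFailsAtRuntime, prices)
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[*]").appName("typed-demo").getOrCreate()
    try println(run(spark))
    finally spark.stop()
  }
}
```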

Once again, a fantastic content session to wrap up the course.

Assignment

The final assignment of the course focuses on comparing the Dataset API with the DataFrame and Spark SQL APIs in a very practical manner. Based on data on how people spend their time, split across categories such as primary needs, work, and spare time activities, we compute some aggregated statistics using the untyped DataFrame and SQL APIs and the typed Dataset API. I feel this assignment shows the practical differences between the APIs really well and also gives the student more freedom in how to implement each of the tasks.
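To give a feel for the comparison, here is a rough sketch of the same grouped average computed with each of the three APIs, assuming Spark in local mode. The `Activity` schema, the view name, and the sample rows are invented and much simpler than the assignment's data.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.avg

final case class Activity(working: String, primaryNeeds: Double) // hypothetical schema

object ThreeApis {
  type Averages = Map[String, Double]

  def run(spark: SparkSession): (Averages, Averages, Averages) = {
    import spark.implicits._

    val ds = Seq(
      Activity("working", 10.0),
      Activity("working", 12.0),
      Activity("not working", 13.0)
    ).toDS()
    val df = ds.toDF()

    // Untyped DataFrame API: columns are referenced by name.
    val viaDataFrame = df.groupBy($"working").agg(avg($"primaryNeeds"))
      .collect().map(r => r.getString(0) -> r.getDouble(1)).toMap

    // Spark SQL: the same query as a SQL string against a temporary view.
    df.createOrReplaceTempView("activities")
    val viaSql = spark
      .sql("SELECT working, AVG(primaryNeeds) FROM activities GROUP BY working")
      .collect().map(r => r.getString(0) -> r.getDouble(1)).toMap

    // Typed Dataset API: the key is a Scala function, the aggregate a typed column.
    val viaDataset = ds.groupByKey(_.working)
      .agg(avg($"primaryNeeds").as[Double])
      .collect().toMap

    (viaDataFrame, viaSql, viaDataset)
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[*]").appName("three-apis").getOrCreate()
    try println(run(spark))
    finally spark.stop()
  }
}
```

All three variants should produce the same averages; the typed version returns a `Dataset[(String, Double)]` directly, while the untyped ones return rows that have to be read by position or name.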

Since I had previous experience with the DataFrame and Spark SQL APIs from working with them, I found this assignment much less challenging, but still seeing the three APIs in comparison was useful.

TL;DR - Just give me the overview

- The course introduces Apache Spark and the key concepts in a very understandable and practical way

- The course felt very hands-on and well-executed, with very clear explanations that make use of practical examples

- The assignments are fun, each of them working with a real-life set of data and exploring different Spark concepts and APIs

- Overall I was very happy with the course and would love to see a more in-depth sequel